Document scanning¶

I revisited my entry from 2014 and discovered that there have been some changes this year to my process of storing PDF documents. Shocking, I know.

While the workflow is in general still the same, I have simplified the storage and distribution, but also adjusted the input process slightly.

The main two changes are:

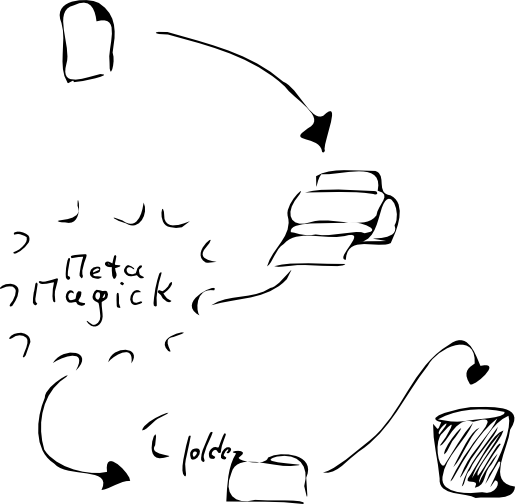

The main catch-folder for scanned documents is processed every two hours and searched for files matching a certain pattern. If that pattern is matched, the file is moved to its designated location and permanently stored there.

On the input side my scripts now also support grey-scale and color scans if necessary. Real life has shown that certain design patterns on documents are hard to read in black and white mode. A minority of creditors for example introduces gray background on parts of the document to highlight or visually separate information. This either shows not at all or as black in a black/white-scan. If the gray-value passes a certain threshold, the information within that area is indistinguishable from the area itself.

About metadata¶

I basically stopped editing the meta-data within the PDF document. The work was pretty easy to do and some support scripts took most of the load. However: I cannot recall a single instance where I had usage for any of the meta-information stored within the PDF document. Mostly the information stored in the filename was enough to identify a document and to handle it. Since there was no benefit I gave up on the dream of a neatly tagged information store for my PDF documents and only use the filename for processing.

When it comes to the elements of the filename, this basically still the same:

<YYYYmmdd>-<author_name>-<documentype>[<subject>][-<keywords>].pdf

There are still not many rules here. As long as the document matches this structure, I consider it valid:

The document date is the date on the document in the format 19700101. This can be tricky sometimes when handling manuals which not necessarily have a specific release-date. When that case occurs, I usually make something up.

The author name is string of the author of the document. In most cases these are companies, so usually rather short. Multiple words are separated with underscores (_).

The document-type is one of those:

avt, kon, con: Contracts. In multiple languages.

doc: The default.

bil, tic: Tickets, in multiple languages.

fak, rec, inv: Invoices, in multiple languages.

ord: Orders of something

odb: Order confirmations.

kvi: Receipts. This could be rec as well, but would then conflict with the German abbreviation for invoice.

til: Offers

inf: The other default document. Something that can slightly be classified as an information notice but does not fit any other category.

The subject is optional. If it’s an invoice and it has an invoice number, I take that. Same for orders and other short subjects. Otherwise I use the keywords.

The keywords are optional as well. These contain usually the general topic of the document, articles purchases or listed and similar content.

The processing¶

The folder structure as describes in 2014 is still in place. Recently I added a minor detail where each authors sub-folder also can contain subdirectories based on the document type.

Folder structure¶

So I went from this:

/-

| homefolder

- author_1

- author_2

- author_3

- author_4

- author_5

- ...

To this:

/-

| homefolder

- author_1

- fak

- doc

- author_2

- rec

- ord

- author_3

- inf

- author_4

- kvi

- author_5

- avt

- ...

The reason for this is that I maintained multiple sources of where documents where stored.

Documents like invoices, offers, etc where classified to a certain author. That worked great.

Documents like receipts however, which I mainly use for warranty-reasons where piled up in a different location, unsorted and uncategorised. That made it easy to look for a receipt, where I did not have to remember where I bought a certain product or service. But it broke the whole concept of storing it a specific location.

Manuals were also some kind of a beast to handle. It has a certain logic to it to store manuals in one place (as you would throw the physical ones in a big box in case you need them later). But this also broke up the one-storage-approach.

Those are now integrated into the authors directory structure and sorted by sub-folders by document type. This is a bit contrary to what I wrote in 2014 where I decided the other way, but over the years there are now enough documents to handle.

Sorting¶

The script mentioned in 20214 for sorting the files has been completed. It now processes an inbox-like directory with all kinds of files every two hours.

Every file that matches the filename-syntax mentioned above is then stored in a destination folder under the author’s name and document type.

Finding stuff¶

The little script finddoc from the 2014 article is still in place. But it is not that quick anymore. For searching all 6736 pdf-documents for a certain string it takes about 7 seconds. When you look closely, you see that the script is actually searching through all files in the given location. That number would be 38392. Sadly it had no effect limiting the find-command to only PDF documents.

At one point I will consider using locate/plocate and the little database behind it for this instead. Quick tests while writing this article brought the time down to 0.3 seconds instead.

With this in mind, I am still happily processing my documents and sorting my stuff. Thanks for asking.